[See also: POST, PUT, DELETE]

A while ago I wrote a series of posts on REST handling with Spring 3. Now it's time to look at handling REST with Lift - a web framework for Scala.

I have just started my adventure with Lift, so bear with me if something is not optimal (although I did my best to make sure it is) - and please point it out.

In this article - handle GET requests

In this article I will show you how to handle GET requests using Lift. You can look at the corresponding Spring 3 post. For REST newbies I would recommend these two articles:

The means

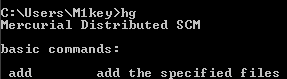

Normally I code in Eclipse, but this time I decided to use IntelliJ IDEA, since it has a free community edition now and in my opinion it handles Scala better than the plugin available for Eclipse. You can download IntelliJ IDEA (I got version 9.0.2), but if you want to stick to Eclipse (or Notepad), it's fine.

Should you choose IntelliJ IDEA, you're going to have to (well, not really, but since you are using an IDE...) get the Scala plugin (which you should do through File -> Settings -> Plugins -> Available).

What you do require is Maven.

Also, I'm going to use Scala version 2.7.3 and Lift 1.0.

Create a project from archetype

How you run Maven for this step is up to you (via IntelliJ IDEA, Eclipse, or the command line). The archetype you should use is:

net.liftweb:lift-archetype-blank:RELEASE

You should check your pom.xml file. If you got Scala version 2.7.1 (or any other than 2.7.3), you should update it to 2.7.3.

<properties>
  <scala.version>2.7.3</scala.version>
</properties>

You should make sure your lift-webkit version is 1.0:

<dependency>
  <groupId>net.liftweb</groupId>
  <artifactId>lift-webkit</artifactId>
  <version>1.0</version>
</dependency>

Another problem you might get is the DTD location in your web.xml file.

Wrong:

http://java.sun.com/j2ee/dtds/web-app_2_3.dtd

Correct:

http://java.sun.com/dtd/web-app_2_3.dtd

When you build this project (run the Maven goal jetty:run), you might get an error:

[WARNING] found : java.lang.String("/")
[WARNING] required: net.liftweb.sitemap.Loc.Link[net.liftweb.sitemap.NullLocParams]
[WARNING] (Menu(Loc("Home", "/", "Home"))

That's a problem with your Boot.scala file. It can be easily fixed by replacing "/" with

List("/")

Alternatively, delete the whole SiteMap setup altogether; we won't need it in this project.

Business logic

This is not really that important - in your real life application you will replace this code with something that actually does something useful. Anyway, please take a look:

package me.m1key.rest

import me.m1key.model.Dog
import net.liftweb.util.{Full, Empty, Box}
import net.liftweb.http.{InMemoryResponse, LiftResponse}

object DogsRestService {

  // Dummy lookup: only the dog with ID "1" exists.
  def getDog(dogId: String): Box[LiftResponse] = dogId match {
    case "1" =>
      val dog: Dog = new Dog("1", "Sega")
      // Arguments: body bytes, headers, cookies, HTTP status code.
      Full(InMemoryResponse(dog.toXml.toString.getBytes("UTF-8"), List("Content-Type" -> "text/xml"), Nil, 200))
    // Any other ID yields Empty.
    case _ => Empty
  }
}

It is a Scala object so that we don't need an instance of it. It defines one method, getDog by ID, and has a very dummy implementation. The interesting part is this line:

Full(InMemoryResponse(dog.toXml.toString.getBytes("UTF-8"), List("Content-Type" -> "text/xml"), Nil, 200))

Lift defines the Full/Empty concept (the two cases of its Box type). This is similar to Scala's native Option with its Some/None concept (it's about avoiding nulls). If your REST handling method returns Empty, then the client gets a 404 error. Otherwise, you must return a Full containing a response, here an InMemoryResponse.
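To make the analogy with Option concrete, here is a minimal plain-Scala sketch. It does not use Lift at all: Option stands in for Box (Some for Full, None for Empty), and the names BoxSketch, findDogXml and statusFor are made up for illustration. The 200/404 mapping only mirrors the behaviour described above; it is not how Lift implements it.

```scala
// Plain-Scala sketch: Option stands in for Lift's Box (Some ~ Full, None ~ Empty).
object BoxSketch {

  // Hypothetical lookup: only dog "1" exists, like the dummy service above.
  def findDogXml(dogId: String): Option[String] = dogId match {
    case "1" => Some("<dog><id>1</id><name>Sega</name></dog>")
    case _   => None
  }

  // Hypothetical helper: the status code a client would see for a given ID.
  def statusFor(dogId: String): Int = findDogXml(dogId) match {
    case Some(_) => 200 // a value is present, so there is something to send
    case None    => 404 // nothing found
  }
}
```

Calling BoxSketch.statusFor with an unknown ID falls through to the None case, which is the same shape as returning Empty from getDog.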

InMemoryResponse takes four parameters:

- The actual content of the response

- Headers

- Cookies

- HTTP code to return to the client

Scala XML herding

Perhaps you noticed this call in the previous code sample:

dog.toXml

Scala has pretty cool XML support. Here's the actual Dog class.

package me.m1key.model

class Dog(id: String, name: String) {

  def toXml =
    <dog>
      <id>{id}</id>
      <name>{name}</name>
    </dog>
}

The toXml method returns a scala.xml.Elem. Note the Expression Language-like usage of id and name: the constructor parameters are embedded in the XML literal inside curly braces.

Let's see how we can test it.

Test it with JUnit 3

Why JUnit 3? Well, that's what the archetype gives you out of the box. If you don't like it, you can use specs. Here's my article on how to use the Specs library in Eclipse.

package me.m1key.model

import junit.framework.{TestCase, TestSuite, Test}
import junit.framework.Assert._

object DogTest {

  def suite: Test = new TestSuite(classOf[DogTest])

  def main(args: Array[String]) {
    junit.textui.TestRunner.run(suite)
  }
}

/**
 * Unit test.
 */
class DogTest extends TestCase("dog") {

  val dog: Dog = new Dog("1", "Sega")

  def testDogToXmlCorrectName = {
    assertEquals("Sega", (dog.toXml \ "name").text)
  }
}

See how I am using the Scala way of accessing data in an XML document, the (dog.toXml \ "name").text expression in the test method?

Handling REST requests

Now let's see how our DogsRestService.getDog method can be called.

You put the dispatch code in the Boot.scala file (it's the bootstrap code that is called on application startup).

package bootstrap.liftweb

import me.m1key.rest.DogsRestService
import net.liftweb.http.{GetRequest, RequestType, Req, LiftRules}

/**
 * A class that's instantiated early and run. It allows the application
 * to modify lift's environment
 */
class Boot {

  def boot {
    // where to search snippet
    LiftRules.addToPackages("me.m1key")

    // LiftRules.dispatch.append
    LiftRules.statelessDispatchTable.append {
      case Req(List("rest", "dogs", dogId), _, GetRequest) =>
        () => DogsRestService.getDog(dogId)
    }
  }
}

Let's analyze it line by line.

You must tell Lift where (in which packages) to look for views and templates and snippets etc.:

LiftRules.addToPackages("me.m1key")

This is how you would add a new dispatch rule if you wanted to have access to the S object as well as LiftSession:

// LiftRules.dispatch.append {

Next, you must add a new stateless dispatch rule:

LiftRules.statelessDispatchTable.append {

Create a new rule:

case Req(List("rest", "dogs", dogId), _, GetRequest) =>

The List("rest", "dogs", dogId) part means that we expect a URL in this form:

/rest/dogs/1

The 1 will be assigned to the dogId variable (see Scala Pattern Matching).

The second parameter (matched with the wildcard _) is the suffix, and the third one specifies that we only want to handle GET requests.
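The list pattern is ordinary Scala pattern matching, so it can be tried without Lift. The sketch below is plain Scala; PathMatchSketch, segments and dogIdFrom are hypothetical names, and splitting the path by hand is an assumption made for the example (in the real application Lift hands you the path already split).

```scala
// Plain-Scala sketch of matching URL path segments with a List pattern.
object PathMatchSketch {

  // Turns "/rest/dogs/1" into List("rest", "dogs", "1"), dropping empty parts.
  def segments(path: String): List[String] =
    path.split("/").toList.filter(_.length > 0)

  // Matches exactly three segments: the first two are literals,
  // the third is bound to dogId.
  def dogIdFrom(path: String): Option[String] = segments(path) match {
    case List("rest", "dogs", dogId) => Some(dogId)
    case _                           => None
  }
}
```

A path with a different prefix or a different number of segments simply does not match, which is why only URLs of the /rest/dogs/<id> form reach the handler.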

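The declaration of this looks a bit confusing (it did to me), so it helps to realize that the block passed to append is a partial function: it is only defined for requests that match one of its cases, and for a matching request it returns a function that produces the response when called.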

() => DogsRestService.getDog(dogId)
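If the shape of that rule is still unclear, the following plain-Scala sketch shows the same idea without Lift. SimpleReq and DispatchSketch are hypothetical stand-ins (not Lift types), and Option replaces Box; the point is only that the block of case clauses is a PartialFunction whose result is itself a function.

```scala
// Plain-Scala sketch of a dispatch table entry as a partial function.
object DispatchSketch {

  // Hypothetical request type: path segments plus the HTTP method name.
  case class SimpleReq(path: List[String], method: String)

  // Defined only for GET /rest/dogs/<id>. For a matching request it
  // returns a deferred handler, which is evaluated only when invoked.
  val dispatch: PartialFunction[SimpleReq, () => Option[String]] = {
    case SimpleReq(List("rest", "dogs", dogId), "GET") =>
      () =>
        if (dogId == "1") Some("<dog><id>1</id><name>Sega</name></dog>")
        else None
  }
}
```

A caller can first ask dispatch.isDefinedAt(request) to see whether the rule applies, and only then obtain and invoke the handler.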

And that's all you need!

Now just run it (jetty:run), open http://localhost:8080/rest/dogs/1 in the browser and see for yourself.

<dog>
  <id>1</id>
  <name>Sega</name>
</dog>

Summary

In this article I showed you how to handle GET requests with the Lift framework, how to do a bit of unit testing and how to use dispatch rules.

At this moment I don't know whether we can simulate the requests from integration tests (as is possible with Spring).

Download source code for this article

Note

Lift 2.0 has just been released.

They claim that it has better REST support. As soon as more is available on this topic, I will write an article on it as well.